Machine Learning from Human Preferences

Chapter 1: Introduction

Overview

Machine Learning from Human Preferences explores the challenge of efficiently and effectively eliciting preferences from individuals, groups, and societies and embedding them within AI systems.

We focus on statistical and conceptual foundations and strategies for interactively querying humans to elicit information that improves learning and applications.

This class is not exhaustive!

Feedback can be included at any step of training

- Slides modified from Diyi Yang.

Feedback-Update Taxonomy

| | Dataset Update | Loss Function Update | Parameter Space Update |
|---|---|---|---|
| Domain | Dataset modification, Augmentation, Preprocessing, Data generation from constraint, Fairness, Weak supervision, Use unlabeled data, Check synthetic data | Constraint specification, Fairness, Interpretability, Resource constraints | Model editing, Rules, Weights, Model selection, Prior update, Complexity |
| Observation | Active data collection, Add data, Relabel data, Reweighting data, Collecting expert labels, Passive observation | Constraint elicitation, Metric learning, Human representations, Collecting contextual information, Generative factors, Concept representations, Feature attributions | Feature modification, Add/remove features, Engineering features |

Examples: Natural Languages

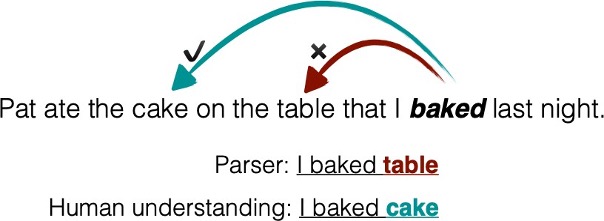

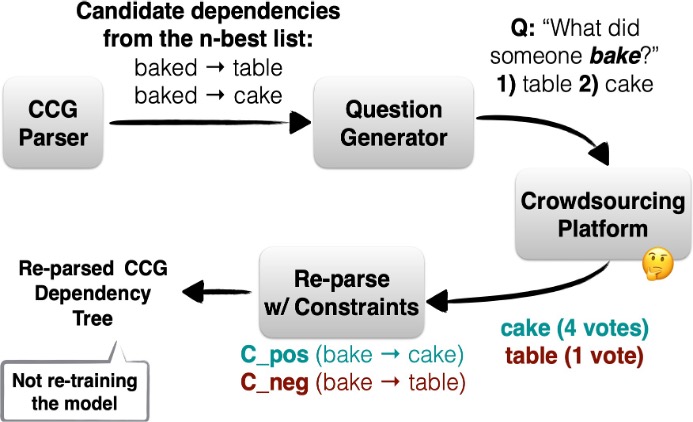

Builds on research studying human feedback in language

Harpale, Sarawagi, and Chakrabarti (2004)

He et al. (2016)

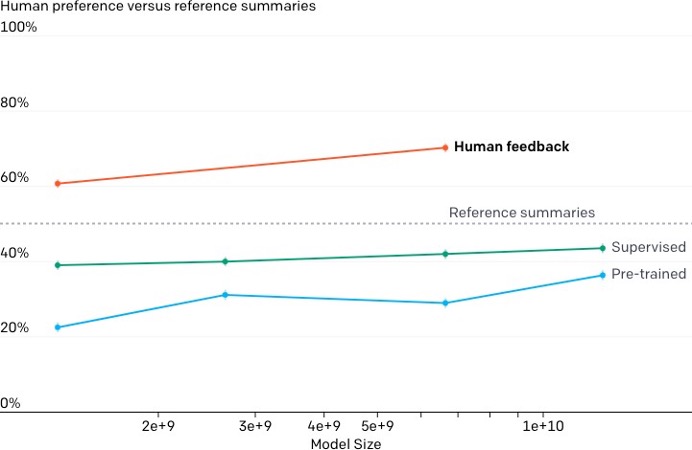

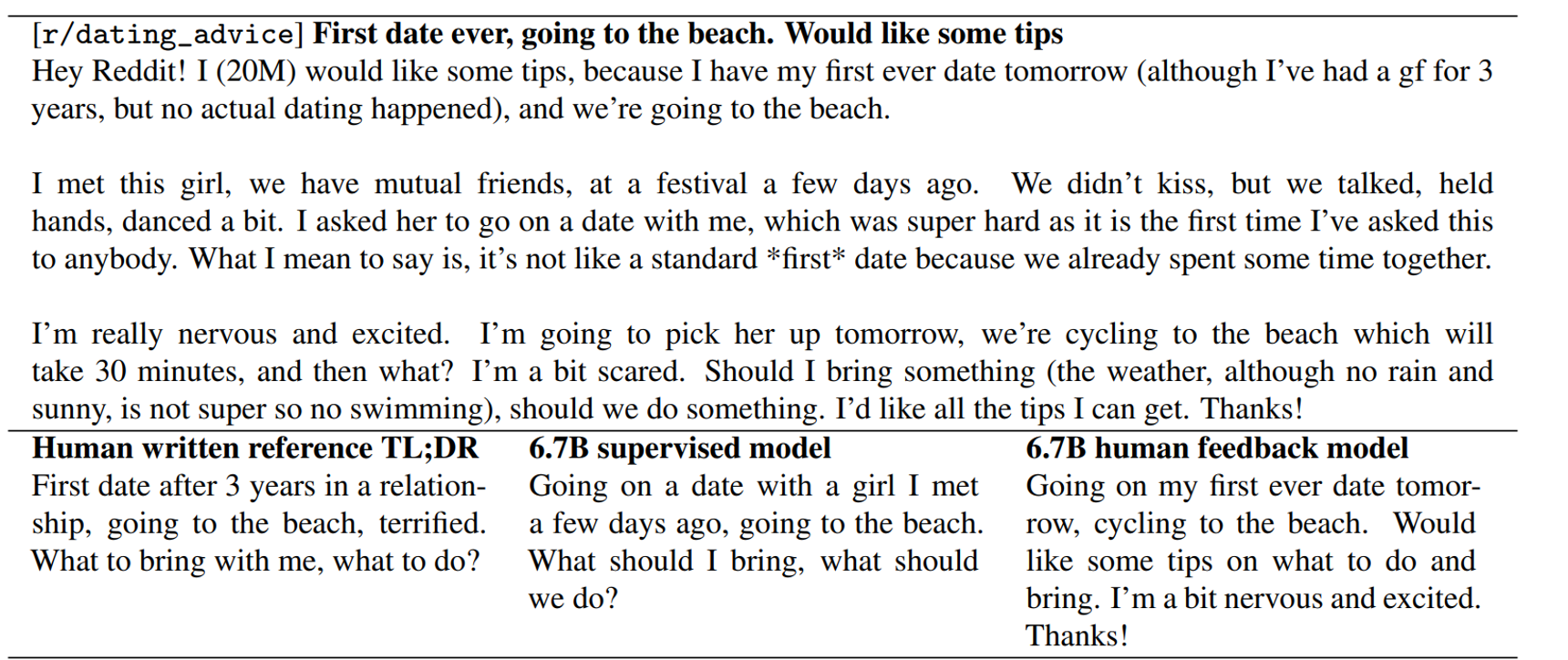

Ouyang et al. (2022)

OpenAI Experiments with RLHF

Stiennon et al. (2020)

Motivation

- Provides a mechanism for gathering signals about correctness that are difficult to describe via data or cost functions, e.g., what does it mean to be funny?

- Provides signals best defined by stakeholders, e.g., helpfulness, fairness, safety training, and alignment.

- Useful when evaluation is easier than modeling ideal behavior.

- Sometimes, we do not care about human preferences per se; we care about fixing model mistakes.

We have not figured out how to do this quite right, and we may need new approaches

- Human preference data reflects human biases, such as a preference for longer responses and an authoritative tone.

- Human preferences can be an unreliable training signal, e.g., they can lead to reward hacking in RL.

Ethical Issues

- Labeling often depends on low-cost human labor

- The line between economic opportunity and employment is unclear

- May cause psychological issues for some workers

Santurkar et al. (2023)

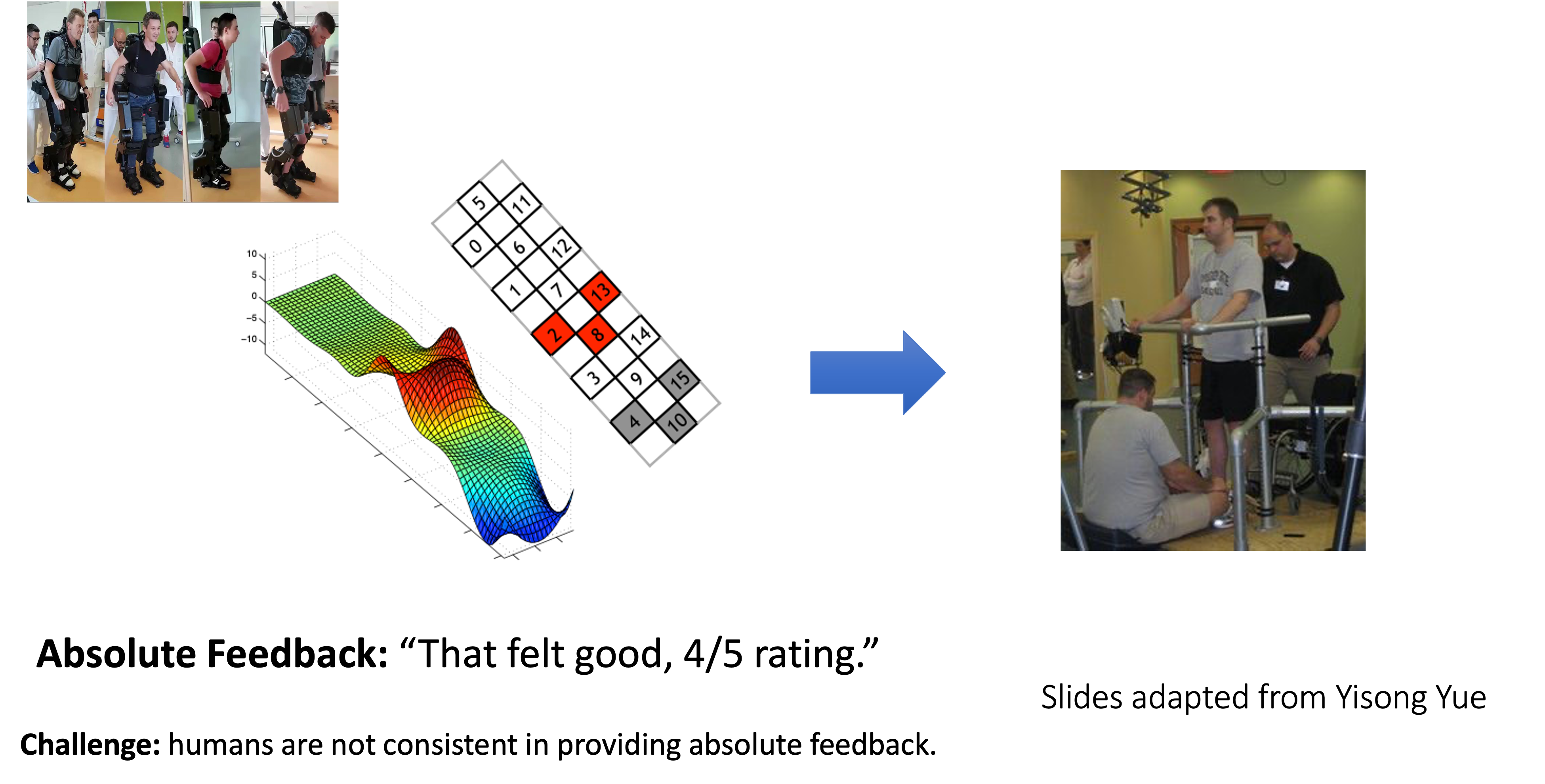

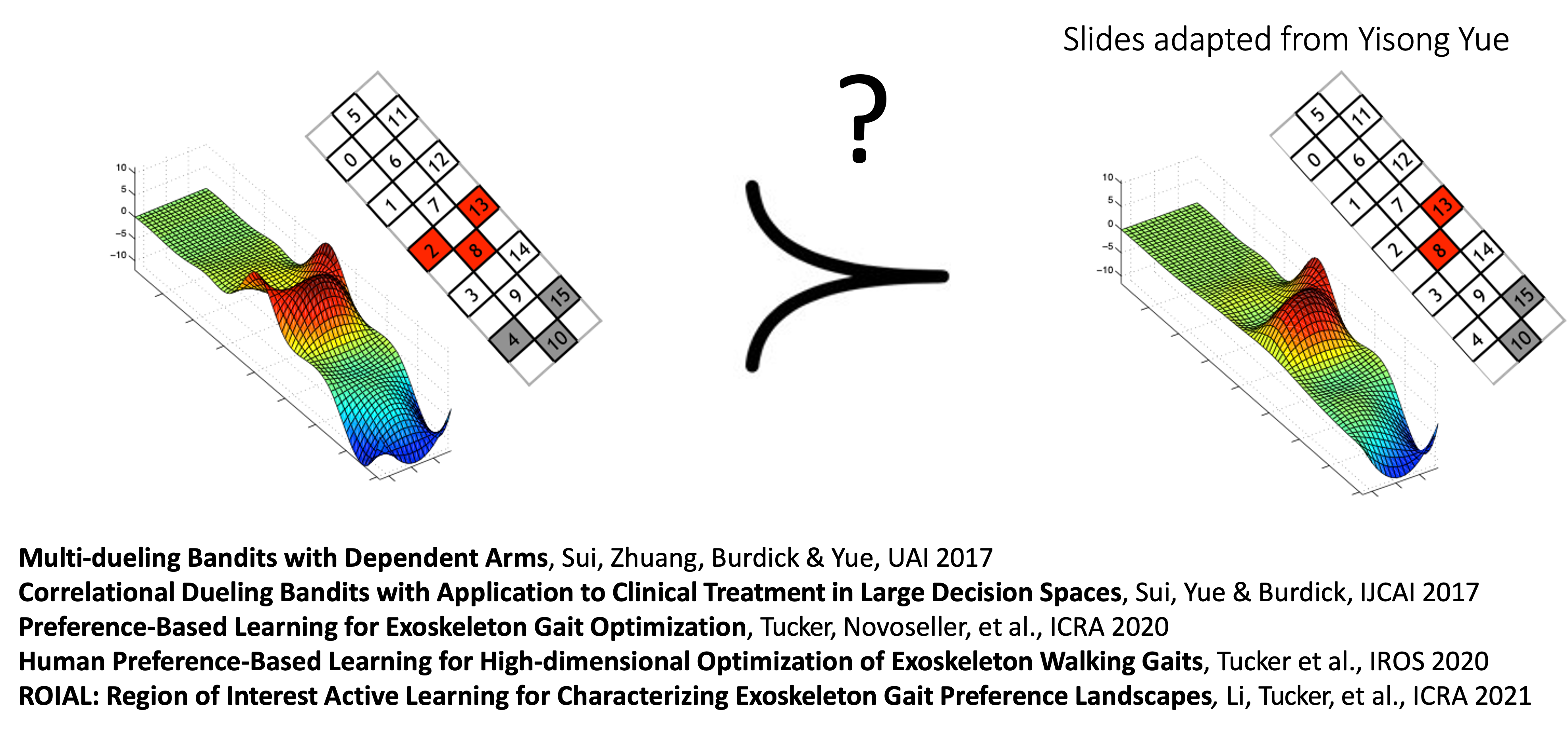

Examples: Dueling Bandits

Personalize therapy

Examples: Metric Elicitation

- Determine the fairness and performance metric by interacting with individual stakeholders (Hiranandani et al. 2019b, 2019a)

- Metric elicitation from stakeholder groups (Robertson, Hiranandani, and Koyejo 2023)

Why elicit metric preferences?

Examples: Inverse RL

Robertson, Haupt, and Koyejo (2023)

Examples: Recommender Systems

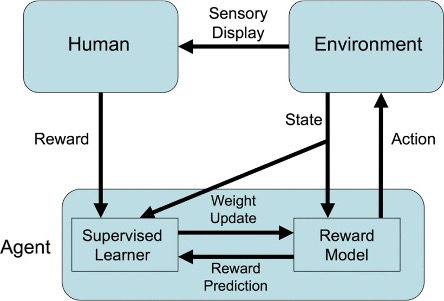

Examples: RLHF

Knox and Stone (2008)

Christiano et al. (2017)
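
As a rough illustration of how pairwise human comparisons can become a training signal, the sketch below assumes a Bradley-Terry style preference model of the kind used in Christiano et al. (2017): the probability that a human prefers option A over option B is a logistic function of the difference in their scalar rewards. The function names and the scalar reward inputs are hypothetical and chosen only for this example; a real system would produce the rewards with a learned model.

```python
import math


def preference_probability(reward_a: float, reward_b: float) -> float:
    """Bradley-Terry probability that option A is preferred over option B.

    Hypothetical helper: rewards are plain floats here, standing in for
    the outputs of a learned reward model.
    """
    diff = reward_a - reward_b
    # Numerically stable logistic function of the reward difference.
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    exp_diff = math.exp(diff)
    return exp_diff / (1.0 + exp_diff)


def preference_loss(reward_a: float, reward_b: float, human_prefers_a: bool) -> float:
    """Negative log-likelihood of one human comparison under the model."""
    p_a = preference_probability(reward_a, reward_b)
    return -math.log(p_a if human_prefers_a else 1.0 - p_a)


if __name__ == "__main__":
    # Equal rewards mean the model is indifferent between the two options.
    print(preference_probability(1.0, 1.0))
    # The loss is small when the model agrees with the human label
    # and large when it disagrees.
    print(preference_loss(2.0, 0.0, human_prefers_a=True))
    print(preference_loss(2.0, 0.0, human_prefers_a=False))
```

Minimizing this loss over many comparisons pushes the reward of preferred options above that of rejected ones, which is one way evaluation (choosing between two outputs) can substitute for specifying ideal behavior directly.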

Examples: Inverse RL

Flying helicopters using imitation learning and inverse reinforcement learning (IRL)

Coates, Abbeel, and Ng (2008)

Biyik and Sadigh (2018)

Biyik, Talati, and Sadigh (2022)

Reward hacking

Tradeoffs

Designing tools for eliciting feedback from humans often requires trading off several factors

Key Assumptions & Discussion

Logistics: Course Goals

- Focus on breadth rather than depth

- Foundations: Judgement, decision making and choice, biases (psychology, marketing), discrete choice theory, mechanism design, choice aggregation (micro-economics), human-computer interaction, ethics

- Machine learning: Modeling, active learning, bandits

- Applications: recommender systems, language models, reinforcement learning, AI alignment

- Note: Schedule is tentative

Logistics: Prerequisites

CS 221, CS 229, or equivalent. You are expected to:

- Be proficient in Python and LaTeX. Most homework and projects will include a programming component.

- Be comfortable with machine learning concepts, such as training and test sets, model fitting, function classes, and loss functions.

Logistics: Books

Our textbook is available online at: mlhp.stanford.edu

Next Topics

Chapter 2: Human Decision Making and Choice Models

Welcome to CS329H!
